OK, so your LinkedIn feed is exploding with "AI this," "Generative AI that," and a million takes on ChatGPT? It feels like all this Generative AI tech crash-landed from another planet sometime in 2022 and now we're being taken over! Well, here's the reality: it didn't, and it's been a long time coming.

Generative AI – the magic that conjures up new text, images, and code – has roots stretching back further than your favorite pair of vintage jeans. It's a story built on decades of brilliant minds, bold experiments, a few dead ends, and relentless tinkering. Knowing this journey isn't just for tech geeks; it's crucial for you, as a business leader, to cut through the deafening hype and see where the real opportunities (and threats) lie.

So, refill your coffee, silence those notifications, and let's take a quick, jargon-free trip down memory lane to see how we got to this AI-powered present. This is the 5-minute history brief you actually need.

1950s-1960s: The Spark - "Can Machines Actually... Think?"

1950: The legendary Alan Turing posed a question that still echoes today: Can a machine behave so intelligently that you couldn't tell it apart from a human? His famous "Turing Test" laid the conceptual cornerstone for everything that followed.

1956: The term "Artificial Intelligence," coined by John McCarthy in the proposal for the event, took hold at the Dartmouth Workshop. Picture this: a gathering of the brightest minds, dreaming up a future where machines could reason. The AI field was officially born.

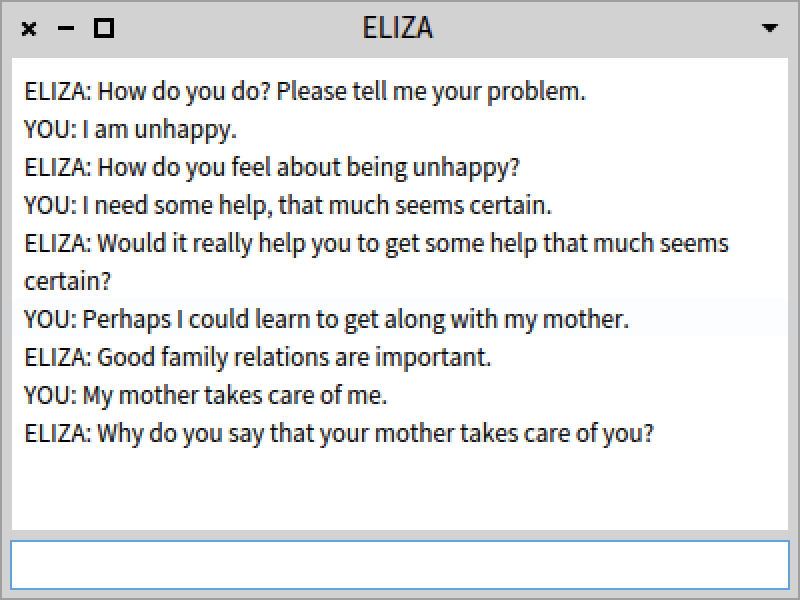

1966: MIT's Joseph Weizenbaum introduced ELIZA, the granddaddy of all chatbots. ELIZA was deceptively simple, often mimicking a psychotherapist. But it was a revelation! For the first time, many people experienced what felt like a conversation with a machine, showing that our relationship with intelligent-seeming tech has always been complicated.

Image: A conversation with ELIZA, one of the earliest chatbots, demonstrating early natural language processing. Source: Wikimedia Commons (Public Domain, https://commons.wikimedia.org/wiki/File:ELIZA_chatbot.png)

1970s-1990s: The Slow Burn & Neural Net Rise

After the initial buzz, AI development saw quieter periods – the "AI winters" – where funding dried up. But crucial groundwork was being laid.

The 1980s and 1990s saw a resurgence of neural networks, algorithms loosely inspired by the human brain. Key developments such as Recurrent Neural Networks (RNNs) and especially Long Short-Term Memory (LSTM) networks (Hochreiter & Schmidhuber, 1997) gave machines the ability to "remember" information across a sequence, which is vital for understanding language and speech.

2010s: The Generative Dawn - GANs, Attention & Transformers

This is where things really accelerated, setting the stage for today's AI tools.

2014: GANs Arrive. Ian Goodfellow, then a PhD student, unleashed Generative Adversarial Networks (GANs).

What is a GAN? Imagine two AIs in a creative battle: one (the Generator) creates fakes, while the other (the Discriminator) tries to call its bluff by telling the fakes apart from real examples. This constant contest pushes the Generator to get incredibly good, which led to a boom in realistic AI-generated images. For businesses, this opened new avenues in creative content generation but also raised questions about deepfakes.

Image: Overview of GAN structure. Source: Google Developers, https://developers.google.com/machine-learning/gan/gan_structure

2017: "Attention Is All You Need." This Google research paper introduced the Transformer architecture. Before Transformers, leading language models (such as RNNs and LSTMs) processed text one word at a time, in order. Transformers dropped that step-by-step approach and relied on an "attention mechanism" that lets every word in a sentence (or document) weigh its relationship to every other word at once, capturing context and meaning. Because this work can run in parallel, models became drastically faster to train and could grow much larger, making the Transformer the bedrock of almost every modern Large Language Model (LLM). For businesses, this meant AI could finally understand and generate human language with previously unseen nuance.
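For the technically curious, here's a toy sketch of the core idea behind attention, written in Python. Treat it as a simplified illustration under stated assumptions, not how production models are built. The word vectors are made-up two-number stand-ins (real models learn vectors with hundreds or thousands of numbers). Each vector is reused as its own "query," "key," and "value" (real Transformers learn a separate projection for each). There is also only a single attention "head." What it shows is that every word scores its relevance to every other word in the sentence, and those scores become weights for blending in context.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Toy scaled dot-product self-attention (single head).

    Simplifying assumption: each word's vector serves as its own
    query, key and value; real Transformers learn separate projections.
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for q in vectors:  # every word looks at every word, including itself
        # Relevance score = dot product, scaled by sqrt of vector size
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in vectors]
        weights = softmax(scores)
        all_weights.append(weights)
        # New representation = weighted blend of all the word vectors
        out = [sum(w * v[i] for w, v in zip(weights, vectors))
               for i in range(d)]
        outputs.append(out)
    return outputs, all_weights

# Hypothetical 2-number "embeddings" for a three-word sentence
sentence = {"bank": [1.0, 0.5], "river": [0.9, 0.1], "money": [0.1, 1.0]}
outputs, weights = self_attention(list(sentence.values()))
for word, w in zip(sentence, weights):
    print(word, [round(x, 2) for x in w])
```

The loop walks through the words one by one only for readability. Real implementations compute all of these scores at once with matrix multiplication, which is what lets Transformers process a whole sentence in parallel on modern hardware.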

2018: GPT-1. Building on Transformers, OpenAI released the first Generative Pre-trained Transformer (GPT). It wasn't perfect, but it signaled the dawn of powerful, general-purpose language models. The race was on.

2020-Present: The Mainstream Explosion - AI Enters Your Life

If the 2010s lit the fuse, the 2020s are the fireworks show.

2020: GPT-3 Changes Everything. OpenAI dropped GPT-3, and the tech world was stunned. Trained on a vast swathe of the internet, its ability to write coherent articles, translate, and even code showed the power of scale. Business leaders started to really pay attention.

2022: The Tipping Point. Generative AI crashed into public consciousness. Stable Diffusion (Stability AI) offered powerful open-source image generation, while ChatGPT (OpenAI) provided an easy-to-use interface for a highly capable LLM. Suddenly, everyone was an AI expert (or pretended to be).

2023: The AI Arms Race. The gloves came off. OpenAI launched GPT-4. Google countered with PaLM 2 and Gemini. Anthropic pushed Claude. Meta backed open-source with Llama 2. The pace became frantic.

Late 2024-Early 2025: Specialization & Real-World Integration

The AI frontier continues to expand.

While multimodal models like OpenAI's Sora (video generation) capture headlines, we're also seeing a surge in specialized models that excel at specific tasks. (We'll cover multimodal AI in another post, but in short, it's Generative AI that can take in and produce several types of content, such as text, images, audio, and video.)

DeepSeek Coder, a family of models built specifically for code generation and completion, is helping boost developer productivity.

Alibaba Cloud's Qwen-VL models push the boundaries of vision-language understanding, shaping how AI works with visual data for businesses.

Meanwhile, AI agents like Manus, part of a new class of systems that can plan and carry out multi-step tasks with limited human input, are beginning to demonstrate more complex real-world task completion. They hint at a future of more autonomous AI across industries.

The debate between powerful, closed proprietary models and increasingly capable open-source alternatives intensifies, offering businesses a wider, but also more complex, strategic landscape.

Why This History Matters to YOU (Yes, You, the Busy Leader)

Understanding this journey isn't just academic; it's strategic:

Not an Overnight Sensation (Manage Expectations): This tech is built on 70+ years of research. Real AI integration takes time and understanding, not just chasing hype.

Big Leaps from Big Ideas (Spot the Signals): GANs and Transformers were fundamental shifts. Keep an eye out for genuine breakthroughs; they signal future disruptions and opportunities.

Scale Matters (A Lot): The jump from GPT-2 (2019, 1.5 billion parameters) to GPT-3 (175 billion parameters) showed that bigger models, trained on more data with more compute, can yield surprisingly powerful results. This affects resource allocation and competition.

Accessibility Drives Adoption (Make it Usable): ChatGPT's easy interface brought LLMs to millions. The most usable tech often wins. How can you make AI accessible to your customers and team?

Still Early Days (Seriously): Despite rapid progress, we're likely just scratching the surface, especially with multimodal AI and reasoning. The biggest transformations are probably still ahead. Are you ready?

So, next time someone talks about the "AI revolution," you'll have the context. It's not a sudden storm but a climate that has been changing for decades. The recent explosion is built on persistent work. And the next chapter? It's being written now, and you have a role to play.

Which part of AI's history surprised you, or what future development are you watching for your business? Reply to this email or drop a comment on X (@hashisiva).

💡 We are out of tokens for this week’s Context Window!

Thanks for reading!

Follow the author:

X at @hashisiva | LinkedIn